I did some research last week for a project we are doing for a museum. I can't go into detail here because we haven't finalized the contract yet, but I can share a little about our thought process in finding a compelling setup and the technical puzzle pieces that make it work.

The basic idea is to bring our ecosystem simulation Trophix as close as possible to the museum's visitors. So: no screens with a mouse or game controller. In short, we want to avoid any setup that would work just as well, or better, on a laptop at home. Instead, we want to use all the space the museum offers us as a canvas on which to display our simulation and for visitors to interact with.

The exhibition is planned in phases. The first-phase installation is intended as a demonstrator for future development, which also means we have to work with a limited budget and limited space.

When visitors enter the exhibition space, they see a table with a simplified map projected onto it. They also see a number of objects that they can place and move around on the table.

The map is projected onto the tabletop by a ceiling-mounted projector. The objects that can be placed on the table carry visual markers. A camera pointed at the table tracks their position and rotation, so we can adjust the simulation displayed on the table in real time.
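The camera and the projector see the table from different positions, so a marker's pixel position in the camera image still has to be converted into a position on the projected map. The post doesn't say how this is done. A common approach, assumed here rather than taken from the post, is a planar homography estimated from four reference points, for example the table corners as seen by the camera paired with their known map coordinates. The sketch below solves for the 3×3 matrix with NumPy. OpenCV's `cv2.getPerspectiveTransform` computes the same matrix from four point pairs. The function names are hypothetical.

```python
import numpy as np


def compute_homography(src, dst):
    """Solve for the 3x3 homography H mapping four src points onto four dst points.

    Uses the direct linear formulation with H[2][2] fixed to 1, which gives
    an 8x8 linear system (two equations per point pair).
    """
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u * (h6*x + h7*y + 1) = h0*x + h1*y + h2, rearranged to be linear in h
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rhs.append(u)
        # v * (h6*x + h7*y + 1) = h3*x + h4*y + h5
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.append(v)
    h = np.linalg.solve(np.array(rows, dtype=float), np.array(rhs, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)


def apply_homography(H, point):
    """Map one (x, y) camera pixel to table coordinates (perspective divide included)."""
    u, v, w = H @ np.array([point[0], point[1], 1.0])
    return u / w, v / w
```

Usage, with made-up corner pixels and the map normalized to the unit square: `H = compute_homography([(100, 50), (540, 60), (560, 420), (90, 410)], [(0, 0), (1, 0), (1, 1), (0, 1)])`, then `apply_homography(H, marker_center)` for each tracked marker. The four points must not contain three collinear ones, otherwise the system is singular.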

The obvious technical challenges we could imagine with a setup like this were:

- Visual markers can be a big problem because the lighting on the table changes constantly, depending on what the simulation is showing.

- How accurately and stably can we track the objects on a limited budget? Are a webcam and QR-code-like markers enough?

- How can we implement this in the Godot game engine? Godot has some AR tracking capabilities, but they are mostly aimed at VR headset tracking, not generic markers.

After a quick Google search, ArUco markers combined with the OpenCV library looked like a promising option.
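OpenCV's ArUco detection returns, for each detected marker, its ID and its four corner points in image pixels. For moving objects around a 2D map, the useful quantities are the marker's center and its in-plane rotation. Below is a minimal sketch of that reduction. It assumes OpenCV's documented corner order (top-left, top-right, bottom-right, bottom-left, clockwise). The function name `marker_pose_2d` is hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def marker_pose_2d(corners: Sequence[Point]) -> Tuple[float, float, float]:
    """Return (center_x, center_y, angle_deg) for one marker's four corners.

    Assumes the corner order top-left, top-right, bottom-right, bottom-left
    in image pixel coordinates, where the y axis points down.
    """
    if len(corners) != 4:
        raise ValueError("expected exactly four corners")
    # The center is the average of the four corners.
    cx = sum(p[0] for p in corners) / 4.0
    cy = sum(p[1] for p in corners) / 4.0
    # Direction of the marker's top edge. 0 deg points along +x; because image
    # y points down, positive angles correspond to clockwise rotation on screen.
    (x0, y0), (x1, y1) = corners[0], corners[1]
    angle = math.degrees(math.atan2(y1 - y0, x1 - x0)) % 360.0
    return cx, cy, angle
```

With OpenCV, each entry of the returned corners list is an array of shape `(1, 4, 2)`, so calling `marker_pose_2d(c.reshape(4, 2))` for each entry `c` is one way to feed it in.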

I'm a big fan of keeping the components of technical systems separate, especially in real-time exhibition contexts. So the plan was to first evaluate the tracking quality on its own and only then integrate it into our Godot setup.
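One simple way to put a number on tracking quality before touching Godot is to put a marker down and leave it still for a few seconds. Then measure how much its reported position wanders around. The sketch below computes the RMS distance of the samples from their mean. This is an assumed metric, not something the post describes, and `positional_jitter` is a hypothetical name.

```python
import math
import statistics
from typing import Iterable, Tuple


def positional_jitter(samples: Iterable[Tuple[float, float]]) -> float:
    """RMS distance of tracked (x, y) samples from their mean position.

    Intended for a marker that is physically stationary: any spread in the
    samples is tracking noise. The result is in the same units as the input
    (e.g. pixels or table coordinates).
    """
    points = list(samples)
    if len(points) < 2:
        raise ValueError("need at least two samples")
    mean_x = statistics.fmean(p[0] for p in points)
    mean_y = statistics.fmean(p[1] for p in points)
    return math.sqrt(
        statistics.fmean((x - mean_x) ** 2 + (y - mean_y) ** 2 for x, y in points)
    )
```

Comparing this value across cameras, resolutions, or lighting conditions gives a rough, repeatable basis for deciding whether a setup is stable enough.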

As I'm also a Python fanatic, I next looked for some example Python code for ArUco marker tracking. It turns out there are some nice snippets on www.automaticaddison.com. I've rewritten most of the code, but it's always a big help to have a working reference example. I also used Docker and Docker Compose to run everything in a containerized environment and to keep the required libraries separate from my host Linux system.

Printing the markers turned out to be a bit of a hassle because our printer spontaneously decided to print spectacularly badly. I "fixed" that by tracing the washed-out blacks with a permanent marker... Aaaand:

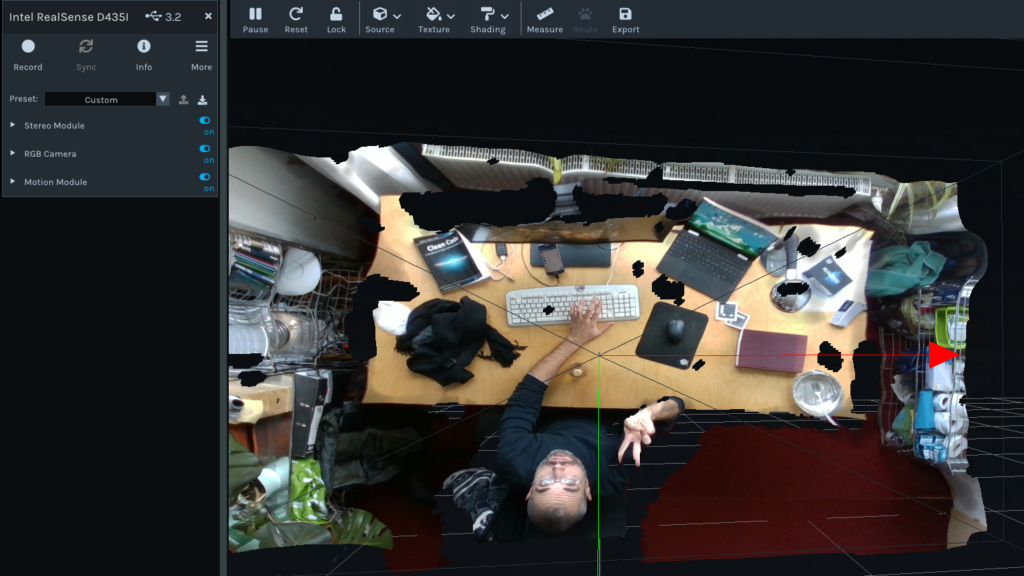

For the camera, I used an Intel RealSense we had lying around and only used its color (RGB) camera, ignoring the depth features for the moment. I'll skip the usual hardware problems here; suffice it to say it worked after an hour of debugging v4l (Video4Linux). Another half hour went into playing with the depth functions.

The next step was to get the data into Godot. For this, I implemented a barebones WebSocket server in Python and a receiving WebSocket client in GDScript on the Godot side. The idea was to move and rotate a few 3D objects according to the tracked markers by simply broadcasting WebSocket messages locally at 60 frames per second. I briefly looked into using UDP instead, but decided not to worry too much about performance just yet.
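The post doesn't show the message format or the broadcast loop, so the following is only a sketch under assumptions. Each frame is serialized as a small JSON object using a hypothetical schema with `id`, `x`, `y`, and `angle` per marker. It is sent at a fixed 60 Hz rate through a `send` coroutine, which stands in for whatever forwards a string to the connected WebSocket clients. The names `encode_frame` and `broadcast_loop` are hypothetical.

```python
import asyncio
import json
from typing import Awaitable, Callable, Dict, Optional, Tuple

Pose = Tuple[float, float, float]  # (x, y, angle_deg) in table coordinates


def encode_frame(markers: Dict[int, Pose]) -> str:
    """Serialize all tracked markers of one frame into a JSON string (hypothetical schema)."""
    return json.dumps({
        "markers": [
            {"id": marker_id, "x": x, "y": y, "angle": angle}
            for marker_id, (x, y, angle) in sorted(markers.items())
        ]
    })


async def broadcast_loop(
    get_markers: Callable[[], Dict[int, Pose]],
    send: Callable[[str], Awaitable[None]],
    fps: float = 60.0,
    frames: Optional[int] = None,
) -> None:
    """Send one encoded frame per tick at roughly `fps` Hz; run forever if frames is None."""
    loop = asyncio.get_running_loop()
    period = 1.0 / fps
    next_tick = loop.time()
    sent = 0
    while frames is None or sent < frames:
        await send(encode_frame(get_markers()))
        sent += 1
        # Schedule against an absolute clock so the time spent sending
        # doesn't accumulate as drift in the frame rate.
        next_tick += period
        await asyncio.sleep(max(0.0, next_tick - loop.time()))
```

On the receiving side, the Godot client would parse each message as JSON and apply `x`, `y`, and `angle` to the node associated with each marker `id`. Keeping the payload as plain JSON makes it easy to inspect while debugging, at the cost of a few bytes per frame.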

Yay. I've disabled rotation for the time being because the objects flickered a little too much.
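A common remedy for this kind of flicker, though not something the post tried, is to low-pass filter each marker's pose before broadcasting it. The sketch below applies an exponential moving average to position and angle. The angle needs special care: averaging 350° and 10° naively gives 180°, so the filter steps along the shortest angular difference instead. The class name `PoseSmoother` and the default `alpha` are hypothetical. Lower `alpha` means smoother but laggier motion.

```python
class PoseSmoother:
    """Exponential moving average for one marker's (x, y, angle_deg)."""

    def __init__(self, alpha: float = 0.3):
        # alpha in (0, 1]: weight of the newest measurement.
        self.alpha = alpha
        self.state = None

    def update(self, x: float, y: float, angle_deg: float):
        if self.state is None:
            # The first measurement initializes the filter unchanged.
            self.state = (x, y, angle_deg % 360.0)
            return self.state
        sx, sy, sa = self.state
        a = self.alpha
        sx += a * (x - sx)
        sy += a * (y - sy)
        # Shortest signed angular difference, in the range [-180, 180).
        diff = (angle_deg - sa + 180.0) % 360.0 - 180.0
        sa = (sa + a * diff) % 360.0
        self.state = (sx, sy, sa)
        return self.state
```

One smoother per marker ID, fed every frame, is enough. If a marker disappears for a while, resetting its `state` to `None` avoids a visible slide from the old pose.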

What's next?

- Increasing the camera resolution (probably a GoPro at 4K) to improve tracking stability.

- Experimenting with infrared lights and cameras so that tracking happens in a different wavelength band from the projector's light.
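As a rough way to see why resolution matters for stability, one can estimate how many pixels span one edge of a marker. More pixels per edge means the corner positions, and therefore position and rotation, are quantized more finely. This back-of-the-envelope sketch assumes the camera's horizontal field of view covers exactly the given tabletop width, with no lens distortion. The marker size and table width in the usage note are illustrative assumptions, not figures from the project.

```python
def pixels_across_marker(
    marker_size_m: float, visible_width_m: float, horizontal_resolution_px: int
) -> float:
    """Approximate number of pixels spanning one marker edge.

    Assumes the camera's horizontal field of view maps exactly onto
    `visible_width_m` of tabletop, with no lens distortion or perspective skew.
    """
    return marker_size_m / visible_width_m * horizontal_resolution_px


if __name__ == "__main__":
    # Illustrative numbers only: a 5 cm marker on a 1.2 m wide visible area.
    for width_px in (1920, 3840):
        print(width_px, round(pixels_across_marker(0.05, 1.2, width_px), 1))
```

Under these assumptions, going from 1080p to 4K doubles the pixels per marker edge, from about 80 to about 160.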

That's it for today. Feel free to send me a DM on Twitter or Discord if you have any questions or comments.